
Below, pne stands for Promptulate; it is the project's nickname. The p and e are the first and last letters of Promptulate, and n stands for 9, shorthand for the nine letters between them.
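The arithmetic behind the nickname is easy to check in Python. This snippet is purely illustrative; numeronym is a hypothetical helper and is not part of pne.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as: first letter + count of inner letters + last letter."""
    inner_count = len(word) - 2  # letters strictly between the first and last
    return f"{word[0]}{inner_count}{word[-1]}".lower()


word = "Promptulate"
print(len(word) - 2)    # 9 letters between "p" and "e"
print(numeronym(word))  # p9e, written "pne" with "n" standing in for 9
```

The same scheme produces familiar abbreviations such as i18n for internationalization.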

Overview

Promptulate is an AI Agent application development framework crafted by Cogit Lab, which offers developers an extremely concise and efficient way to build Agent applications through a Pythonic development paradigm. The core philosophy of Promptulate is to borrow and integrate the wisdom of the open-source community, incorporating the highlights of various development frameworks to lower the barrier to entry and build consensus among developers. With Promptulate, you can work with components such as LLMs, Agents, Tools, and RAG using very concise code; most tasks take only a few lines. 🚀

💡 Features

- 🐍 Pythonic Code Style: Embraces the habits of Python developers with a Pythonic SDK, putting everything within your grasp through a single pne.chat function that encapsulates all essential functionality.

- 🧠 Model Compatibility: Supports nearly all types of large models on the market and allows for easy customization to meet specific needs.

- 🕵️‍♂️ Diverse Agents: Offers various types of Agents, such as WebAgent, ToolAgent, and CodeAgent, capable of planning, reasoning, and acting to handle complex problems. The Planner and other components are atomized to simplify the development process.

- 🔗 Low-Cost Integration: Effortlessly integrates tools from different frameworks like LangChain, significantly reducing integration costs.

- 🔨 Functions as Tools: Converts any Python function directly into a tool usable by Agents, simplifying the tool creation and usage process.

- 🪝 Lifecycle and Hooks: Provides a wealth of Hooks and comprehensive lifecycle management, allowing the insertion of custom code at various stages of Agents, Tools, and LLMs.

- 💻 Terminal Integration: Integrates easily with application terminals through built-in client support, enabling rapid debugging of prompts.

- ⏱️ Prompt Caching: Offers a caching mechanism for LLM Prompts to reduce repetitive work and enhance development efficiency.

- 🤖 Powerful OpenAI Wrapper: With pne, you no longer need the OpenAI SDK. Its core functions can be replaced with pne.chat, which also provides enhanced features that simplify development.

- 🧰 Streamlit Component Integration: Provides many out-of-the-box examples and reusable Streamlit components for rapid prototyping.
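The Functions as Tools feature relies on the fact that a Python function already carries most of what an agent needs to know about a tool: its name, its docstring, and its typed parameters. The sketch below shows one way that metadata could be extracted with the standard inspect module. The helper function_to_tool_schema and the get_weather example are hypothetical and do not reflect pne's actual implementation or API.

```python
import inspect
from typing import Any, Callable, Dict


def function_to_tool_schema(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Describe a plain Python function as a tool an agent could call.

    Hypothetical helper for illustration only; pne's own conversion may differ.
    """
    params: Dict[str, Dict[str, Any]] = {}
    for name, param in inspect.signature(fn).parameters.items():
        annotation = param.annotation
        if annotation is inspect.Parameter.empty:
            type_name = "any"  # no type hint given
        else:
            type_name = getattr(annotation, "__name__", str(annotation))
        params[name] = {
            "type": type_name,
            # A parameter without a default value must be supplied by the agent
            "required": param.default is inspect.Parameter.empty,
        }
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": params,
    }


def get_weather(city: str, unit: str = "celsius") -> str:
    """Return the current weather for a city."""
    return f"Sunny in {city} (unit: {unit})"


schema = function_to_tool_schema(get_weather)
print(schema)

# An agent that picked this tool by name could dispatch the call like this
tools = {schema["name"]: get_weather}
print(tools["get_weather"](city="Paris"))  # Sunny in Paris (unit: celsius)
```

Keeping the schema as plain data means it can be shown to a model in a prompt, while the name-to-function mapping stays on the Python side.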

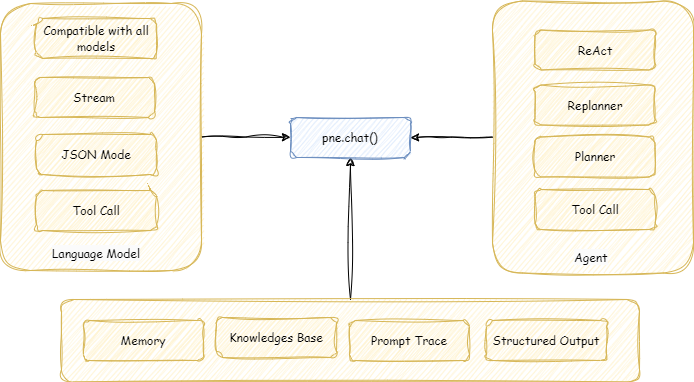

The following diagram shows the core architecture of promptulate:

The core idea of Promptulate is to provide a simple, Pythonic, and efficient way to build AI applications, so you don't need to spend a lot of time learning the framework. We aim for pne.chat() to handle most of the work, so you can build almost any AI application with just a few lines of code.

🤖 Supported Base Models

Promptulate integrates the capabilities of litellm, supporting nearly all types of large models on the market, including but not limited to the following models:

| Provider | Completion | Streaming | Async Completion | Async Streaming | Async Embedding | Async Image Generation |
|---|---|---|---|---|---|---|
| openai | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| azure | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| aws - sagemaker | ✅ | ✅ | ✅ | ✅ | ✅ | |
| aws - bedrock | ✅ | ✅ | ✅ | ✅ | ✅ | |
| google - vertex_ai [Gemini] | ✅ | ✅ | ✅ | ✅ | | |
| google - palm | ✅ | ✅ | ✅ | ✅ | | |
| google AI Studio - gemini | ✅ | ✅ | | | | |
| mistral ai api | ✅ | ✅ | ✅ | ✅ | ✅ | |
| cloudflare AI Workers | ✅ | ✅ | ✅ | ✅ | | |
| cohere | ✅ | ✅ | ✅ | ✅ | ✅ | |
| anthropic | ✅ | ✅ | ✅ | ✅ | | |
| huggingface | ✅ | ✅ | ✅ | ✅ | ✅ | |
| replicate | ✅ | ✅ | ✅ | ✅ | | |
| together_ai | ✅ | ✅ | ✅ | ✅ | | |
| openrouter | ✅ | ✅ | ✅ | ✅ | | |
| ai21 | ✅ | ✅ | ✅ | ✅ | | |
| baseten | ✅ | ✅ | ✅ | ✅ | | |
| vllm | ✅ | ✅ | ✅ | ✅ | | |
| nlp_cloud | ✅ | ✅ | ✅ | ✅ | | |
| aleph alpha | ✅ | ✅ | ✅ | ✅ | | |
| petals | ✅ | ✅ | ✅ | ✅ | | |
| ollama | ✅ | ✅ | ✅ | ✅ | | |
| deepinfra | ✅ | ✅ | ✅ | ✅ | | |
| perplexity-ai | ✅ | ✅ | ✅ | ✅ | | |
| Groq AI | ✅ | ✅ | ✅ | ✅ | | |
| anyscale | ✅ | ✅ | ✅ | ✅ | | |
| voyage ai | | | | | ✅ | |
| xinference [Xorbits Inference] | | | | | ✅ | |

Thanks to this broad model support, pne lets you call models from almost any third-party provider.

The following example shows how to run a local Llama 3 model with Ollama through pne.

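```python
import promptulate as pne

# Requires a local Ollama server with the llama3 model pulled (e.g. `ollama pull llama3`)
resp: str = pne.chat(model="ollama/llama3", messages=[{"content": "Hello, how are you?", "role": "user"}])
print(resp)
```

Use the provider/model_name format to call a model; the same pattern works for the other third-party providers listed above.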
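The provider/model_name convention is easy to reason about: in this sketch, everything before the first slash names the provider and the rest is kept as the model name, so model names that themselves contain a slash survive intact. split_model_name is a hypothetical helper for illustration, not the routing logic inside pne or litellm, and this simplified version always requires an explicit provider prefix.

```python
from typing import Tuple


def split_model_name(model: str) -> Tuple[str, str]:
    """Split a "provider/model_name" string at the first slash.

    Illustrative only: this is not the routing code used by pne or litellm.
    """
    provider, sep, model_name = model.partition("/")
    if not sep or not provider or not model_name:
        raise ValueError(f"expected 'provider/model_name', got {model!r}")
    return provider, model_name


print(split_model_name("ollama/llama3"))  # ('ollama', 'llama3')
# Example string whose model part contains a slash; only the first slash is used
print(split_model_name("huggingface/meta-llama/Meta-Llama-3-8B-Instruct"))
```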

For more models, please visit the litellm documentation.

You can also see how to use pne.chat() in the Getting Started/Official Documentation.

📗 Related Documentation

- Getting Started/Official Documentation

- Current Development Plan

- Contributing/Developer's Manual

- Frequently Asked Questions

- PyPI Repository

📝 Examples

- Build a chatbot using pne + Streamlit to chat with a GitHub repo.

- Build a math application with an agent [Streamlit, ToolAgent, Hooks].

- A Multimodal Robot Agent framework built on ROS2 and Promptulate [Agent].

- Use Streamlit and pne to build a playground that compares different models [Streamlit].

For more detailed information, please check the Quick Start.

📚 Design Principles

The design principles of the pne framework include modularity, extensibility, interoperability, robustness, maintainability, security, efficiency, and usability.

- Modularity refers to using modules as the basic unit, allowing for easy integration of new components, models, and tools.

- Extensibility refers to the framework's ability to handle large amounts of data, complex tasks, and high concurrency.

- Interoperability means the framework is compatible with various external systems, tools, and services and can achieve seamless integration and communication.

- Robustness indicates the framework has strong error handling, fault tolerance, and recovery mechanisms to ensure reliable operation under various conditions.

- Security implies the framework has implemented strict measures to protect against unauthorized access and malicious behavior.

- Efficiency is about optimizing the framework's performance, resource usage, and response times to ensure a smooth and responsive user experience.

- Usability means the framework uses user-friendly interfaces and clear documentation, making it easy to use and understand.

Following these principles and applying the latest artificial intelligence technologies, pne aims to provide a powerful and flexible framework for creating automated agents.

💌 Contact

For more information, please contact: zeeland4work@gmail.com

⭐ Contribution

We appreciate your interest in contributing to our open-source initiative. We have provided a Developer's Guide outlining the steps to contribute to Promptulate. Please refer to this guide to ensure smooth collaboration and successful contributions. Additionally, you can view the Current Development Plan to see the latest development progress 🤝🚀